STORY WRITTEN FOR CBS NEWS & USED WITH PERMISSION

Processing images from the camera aboard NASA’s Juno spacecraft orbiting Jupiter has turned into a cottage industry of sorts, as rank amateurs, accomplished artists and experienced researchers turn relatively drab “raw” images into shots ranging from whimsical to spectacular and everything in between.

The question is, how accurately do they reflect reality, and is there any way for the casual observer to judge the result?

Unlike cameras aboard other NASA spacecraft, the JunoCam imager was added to the Juno mission primarily for public outreach. Its pictures have no bearing on the mission’s scientific objectives, which rely on a suite of eight other instruments to study Jupiter’s interior structure, its gravity and magnetic fields and its immediate environment.

JunoCam’s images are only lightly processed by the camera’s builder, Malin Space Science Systems of San Diego, and immediately posted online. What happens after that is up to the public.

“Once it’s in their hands, we have no control, nor do we want to exert any, over what they do with the data,” said Candy Hansen, a senior scientist at the Planetary Science Institute and the JunoCam instrument lead. “So we have gotten everything from careful scientific-type processing to incredibly whimsical works of art. So it’s a little bit, for you, a buyer-beware situation.”

Even so, she said, “we’re all in, in the sense that I don’t have a team of scientists and image processors waiting in the wings in case the public doesn’t show up. We don’t have a budget, we don’t have staff or anything like that. So we are entirely, 100 percent, relying on the public. And some of them have done fabulous work.”

Juno is the first spacecraft to be sent into an orbit around Jupiter’s poles, and JunoCam was designed primarily to capture detailed images of the planet’s heretofore unseen polar regions.

Because of weight, volume and power restrictions, the spacecraft could not support an advanced telescopic camera. Instead, it was equipped with a relatively simple imager with what amounts to a fish-eye lens. Malin Space Science Systems provided a similar camera to photograph the Curiosity rover’s descent to Mars.

While JunoCam is not as powerful as the sophisticated telescopes and sensors launched aboard other NASA probes, Juno’s elliptical orbit carries it closer to Jupiter than any other spacecraft, within a few thousand miles of the giant planet’s cloud tops. As a result, JunoCam’s wide-angle views provide exceptional detail and more context than more powerful narrow-angle instruments.

But how realistic are the public’s interpretations of JunoCam images? With other NASA spacecraft, the viewer can have confidence the photos were processed and reviewed by scientifically competent team members and that the images reflect some sort of scientific reality.

With public processing, as Hansen said, it’s more a case of buyer beware, and the relatively bland raw images lend themselves to Photoshop-type manipulation. To Hansen, the line between a scientifically accurate image and one that takes liberties with the data is “the minute you depart from true color.”

“The minute you start making the blue a little bluer and the red a little redder, now you’ve enhanced the color. And when you really go to the sort of wild ends of the color palette, then I would call it exaggerated. If you’re just plain making up things, then it’s false color.”

So should viewers wanting to learn more about Jupiter prefer realistic lighting and color to enhanced or exaggerated images?

“Let me argue against that,” she said. “Our human eye-brain combination is better at seeing details that are there when you exaggerate it a bit, when you enhance it a bit. The details, you can see (them) if you know what you’re looking for in the true color images. But it’s so subtle, it’s really, like, washed out. I would say we learn a lot by looking at enhanced color images because it pops more to the eye-brain combo.”
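For readers curious what “making the red a little redder” can mean in practice, the following is a minimal sketch of one common approach, a saturation boost on a single pixel. It is an illustration only, not the method used by any processor named in this story; the function name `enhance_saturation`, the sample pixel and the boost factors are all assumptions chosen for the example.

```python
import colorsys


def enhance_saturation(pixel, factor):
    """Scale the saturation of one 8-bit RGB pixel by `factor` (hypothetical helper).

    A factor of 1.0 leaves the color unchanged; larger factors push it
    toward a more vivid version of the same hue at the same brightness.
    """
    r, g, b = (channel / 255.0 for channel in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    s = min(1.0, s * factor)  # saturation cannot exceed 1.0
    boosted = colorsys.hsv_to_rgb(h, s, v)
    return tuple(round(channel * 255) for channel in boosted)


# A muted tan, standing in for a washed-out cloud band (made-up value).
muted = (180, 150, 130)
enhanced = enhance_saturation(muted, 1.5)     # a modest boost
exaggerated = enhance_saturation(muted, 4.0)  # pushed to the limit
print(muted, enhanced, exaggerated)
```

A modest factor keeps the hue and brightness while widening the gap between the color channels, which is loosely what Hansen describes as enhanced color. A large factor drives the saturation to its maximum, closer to what she calls exaggerated.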

Raw images from JunoCam are posted on a website Hansen helps manage. Each raw image includes the same view shot in green, blue and red filters and then a slightly processed color view that is a combination of all three. The public can download those images, process them in a wide variety of ways and upload the results back to the website.
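As a rough illustration of how three filtered views can become one color picture, the sketch below stacks separate red, green and blue frames into a single grid of color pixels. It assumes the three frames are already aligned and the same size, which real JunoCam data would need extra processing to achieve; the function name `combine_channels` and the tiny 2-by-2 frames are hypothetical stand-ins.

```python
def combine_channels(red, green, blue):
    """Merge three equally sized grayscale frames into one RGB image.

    Each frame is a list of rows of brightness values (0-255). The result
    is a list of rows of (r, g, b) tuples. Hypothetical helper that
    assumes the frames are already aligned with one another.
    """
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("frames must have the same number of rows")
    image = []
    for r_row, g_row, b_row in zip(red, green, blue):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("rows must have the same number of pixels")
        image.append(list(zip(r_row, g_row, b_row)))
    return image


# Made-up 2x2 frames, one per filter.
red = [[200, 180], [160, 140]]
green = [[150, 140], [120, 100]]
blue = [[100, 90], [80, 60]]

rgb = combine_channels(red, green, blue)
print(rgb[0][0])
```

Each output pixel simply takes its red value from the red frame, its green from the green frame and its blue from the blue frame. Any color balancing or lighting correction would be a separate step.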

As long as the processed images relate to Jupiter, and don’t contain unrelated or objectionable material, they are re-posted and available for anyone to download. All are in the public domain, although uploaders can opt to restrict commercial usage.

Hansen cited several processors for their work, including Björn Jónsson, who she said goes to great lengths to ensure realistic lighting and color, and Seán Doran, a graphic artist whose enhanced images are “incredibly beautiful, they are drop-dead gorgeous.”

Gerald Eichstädt, a mathematician and software developer, devised code to ensure uniform lighting across an image, Hansen said, adding “I’m urging him to write up an actual science paper and get some credit for all that work, at least in the scientific community.”

In an email exchange with CBS News, Doran said his images are based on Eichstädt’s work, adding “my aim is to provide an aesthetic enhancement to what he has done.”

“I use a range of techniques in Photoshop to extract detail and enhance subtleties in the source image,” he wrote. “This can develop into quite a large set of actions and layers each with different non-destructive adjustments and masks. These layers are treated with various blend modes to provide finer control in mixing toward the final image.

“I also use exposure settings to draw the eye and give volume to the image. Knowing when to stop is intuitive, and in some cases I will scrap what I have done and start again.”

He said he was “inspired” by the work of Jónsson and Justin Cowart, “whose images provide realistic renderings of Jupiter. Their work is beautiful.”

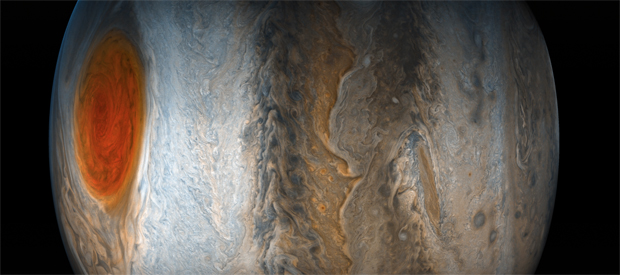

Last Monday, Juno flew over Jupiter’s Great Red Spot for the first time, a highly anticipated event. The Great Red Spot is the largest, most powerful storm in the solar system, stretching more than 10,000 miles across. Within minutes of the first raw images being posted, image processors around the world began uploading their interpretations.

“People must have been just sitting there waiting with Photoshop open!” Hansen laughed. “Within 45 minutes, I already had a queue to approve. This has really been fun.”

Said Doran: “We are only at the start of coming to grips with this data, and in time I expect to see very many beautiful and harmonious treatments.”